Automation & AI

Email QA Copilot

Email QA has become a structural bottleneck in modern marketing operations. As campaign volume increases and teams scale self-service execution, manual QA remains slow and resource-intensive. This in-progress initiative explores how an intelligent QA agent can systematize and automate these checks. The Zapier prototype packages HubSpot email assets via API, evaluates them against governance and compliance rules, and produces structured, actionable QA reports for marketers.

The Problem

Today’s email campaign workflows rely on human reviewers to manually validate execution details: opening test emails, clicking through links, checking UTMs, and visually inspecting formatting, personalization, and layout. This process catches issues, but it is labor-intensive and reactive. Broken links, missing tracking parameters, accessibility gaps, and rendering problems are often discovered late in the QA cycle, triggering rework and delaying launches.

As campaign volumes increase and self-service execution becomes more common, manual QA becomes a structural bottleneck. Reviews require multiple passes and context switching, and the rigor of checks can vary based on time pressure and reviewer experience. Even when issues are ultimately caught, teams lack confidence that every campaign has been evaluated consistently against the same standards, slowing execution and forcing tradeoffs between speed and certainty.

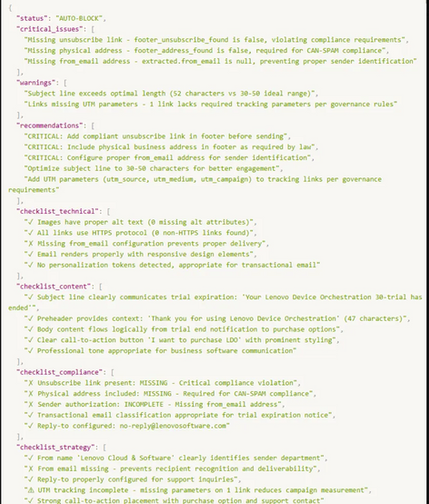

This in-progress initiative explores how an intelligent QA agent can systematize email quality control as part of campaign operations. The prototype automatically packages HubSpot email assets via API, evaluates them against defined governance and compliance rules, and returns structured QA results that clearly distinguish between critical failures, warnings, and recommendations. Rather than replacing human judgment, the agent removes repetitive validation work from the QA cycle and surfaces issues earlier in the build process.

By embedding deterministic QA checks into existing workflows, this approach aims to reduce rework, accelerate launches, and apply standards consistently across all sends. The result is a more scalable and reliable QA model that preserves executional quality and tracking integrity as campaign volume grows.

As campaign volume increases, manual email QA becomes a bottleneck that slows launches and forces late-stage rework.

Quality checks don’t scale when they rely on human memory, time, and availability.

The Solution

01

Automated Asset Packaging and Analysis

Automatically retrieve email HTML and metadata directly from HubSpot via API and package it for automated inspection. This eliminates manual copy-and-paste, ensures the agent evaluates the latest version of the email, and establishes a reliable, repeatable foundation for downstream QA checks.
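As a rough illustration of the packaging step, the sketch below normalizes an already-fetched email payload into a single package that downstream checks can consume. The field names (`id`, `name`, `subject`, `html`) and the `package_email_asset` helper are hypothetical, not the prototype's actual schema or HubSpot's response format; the real payload shape should be taken from the HubSpot API documentation.

```python
import hashlib
from datetime import datetime, timezone

# Hypothetical field names: the real HubSpot response may be shaped differently.
REQUIRED_FIELDS = ("id", "name", "subject", "html")


def package_email_asset(raw: dict) -> dict:
    """Normalize a fetched email payload into one package for QA checks."""
    missing = [field for field in REQUIRED_FIELDS if not raw.get(field)]
    if missing:
        raise ValueError(f"email payload is missing fields: {missing}")

    html = raw["html"]
    return {
        "email_id": str(raw["id"]),
        "name": raw["name"],
        "subject": raw["subject"],
        "html": html,
        # A content fingerprint lets a re-test confirm which version was evaluated.
        "html_sha256": hashlib.sha256(html.encode("utf-8")).hexdigest(),
        "packaged_at": datetime.now(timezone.utc).isoformat(),
    }
```

Rejecting incomplete payloads at this stage keeps later checks from silently passing an email whose HTML was never retrieved.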

02

Governance Validation for Links and UTMs

Implement a rules engine that scans every link to confirm functionality, HTTPS usage, and the presence of correctly structured UTM parameters. UTM values are validated against an approved taxonomy to prevent misclassification, enforce consistent naming, and protect downstream attribution and reporting integrity.
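A minimal sketch of what such link rules might look like, using only the Python standard library. The required parameters, the `APPROVED_VALUES` taxonomy, and the rule names are illustrative assumptions rather than the initiative's actual governance rules. Checking that each link resolves would need a network request and is left out here.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse, parse_qs

# Hypothetical governance rules: real values would come from the approved taxonomy.
REQUIRED_UTMS = ("utm_source", "utm_medium", "utm_campaign")
APPROVED_VALUES = {"utm_source": {"hubspot"}, "utm_medium": {"email"}}


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag in the email HTML."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def check_links(html: str) -> list[dict]:
    """Return one finding per violated rule, tagged with the affected URL."""
    collector = LinkCollector()
    collector.feed(html)
    findings = []
    for href in collector.hrefs:
        parts = urlparse(href)
        # Skip in-page anchors and non-web schemes, which carry no UTMs.
        if href.startswith("#") or parts.scheme in ("mailto", "tel"):
            continue
        if parts.scheme != "https":
            findings.append({"url": href, "rule": "https_required"})
        params = parse_qs(parts.query)
        for key in REQUIRED_UTMS:
            values = params.get(key)
            if not values:
                findings.append({"url": href, "rule": f"missing_{key}"})
            elif key in APPROVED_VALUES and values[0] not in APPROVED_VALUES[key]:
                findings.append({"url": href, "rule": f"unapproved_{key}"})
    return findings
```

Keeping the taxonomy in plain data structures means new approved values can be added without touching the checking logic.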

03

Rendering and Accessibility Checks

Use targeted rendering and accessibility checks to evaluate how emails display across common desktop and mobile clients. The agent flags layout breaks, missing images or fonts, and accessibility gaps such as missing alt text, allowing marketers to address visual and compliance issues before sending.
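Of the checks described here, missing alt text is the simplest to automate. The sketch below shows one possible approach with the standard-library HTML parser; the class and function names are hypothetical. Client-specific rendering checks would typically rely on an external preview service and are not covered.

```python
from html.parser import HTMLParser


class AltTextChecker(HTMLParser):
    """Records the src of every <img> tag that has no alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        # An absent alt attribute is flagged. An empty alt="" is allowed,
        # since that is the conventional way to mark a decorative image.
        if "alt" not in attr_map:
            self.missing_alt.append(attr_map.get("src") or "(no src)")


def find_images_missing_alt(html: str) -> list[str]:
    checker = AltTextChecker()
    checker.feed(html)
    return checker.missing_alt
```

Returning the image source alongside each flag gives the marketer a direct pointer to the element that needs attention.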

04

Structured QA Reporting

Generate standardized, structured QA reports that summarize findings with clear pass, fail, or review indicators. Results include categorized issues and references to affected elements, enabling systematic remediation, faster re-testing, and integration into existing campaign workflows.
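One way such a report could be structured is sketched below. The `Finding` fields and the mapping from severity to overall status (any critical finding fails, any warning triggers review, otherwise pass) are assumptions made for illustration, not the prototype's confirmed schema.

```python
import json
from dataclasses import dataclass, asdict

SEVERITIES = ("critical", "warning", "recommendation")


@dataclass
class Finding:
    rule: str      # e.g. "missing_utm_campaign"
    severity: str  # one of SEVERITIES
    element: str   # reference to the affected link, image, or module
    message: str


def build_report(email_id: str, findings: list[Finding]) -> dict:
    """Summarize findings into a report with an overall status indicator."""
    counts = {severity: 0 for severity in SEVERITIES}
    for finding in findings:
        if finding.severity not in counts:
            raise ValueError(f"unknown severity: {finding.severity}")
        counts[finding.severity] += 1

    # Assumed mapping: critical -> fail, warning -> review, otherwise pass.
    if counts["critical"]:
        status = "fail"
    elif counts["warning"]:
        status = "review"
    else:
        status = "pass"

    return {
        "email_id": email_id,
        "status": status,
        "counts": counts,
        "findings": [asdict(finding) for finding in findings],
    }


def report_to_json(report: dict) -> str:
    return json.dumps(report, indent=2)
```

Because the report is plain JSON-serializable data, it can be posted to a ticket, a chat channel, or a later workflow step without further transformation.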

This initiative reframes email QA as a system capability by embedding automated validation directly into the campaign workflow.

Quality control shifts from individual reviewers to a repeatable, auditable system.

BUSINESS IMPACT

40-60%

projected reduction in QA cycle time

30-50%

projected reduction in rework loops

90%

expected QA rule coverage